How I Built a BI Platform from Scratch without committing suicide ! (Part 3)

OPTIMISATION LIFECYCLE

A DEVELOPER? KESAKO?🤔

Let's talk seriously.

It's all very nice. It's great to see how far technology has come: this speed, these frameworks that do everything, and so on.

But it all starts with the basics: the BASICS. I have noticed that the very appeal of today's technologies, all racing to be the most open or to have the shortest learning curve, has led many beginners and newcomers to rush toward that ease, forgetting along the way what developing really means.

Coding is easy. Everybody is able to memorize code or to make a machine say "1+1 = 2". But DEVELOPING is another thing, a science, a philosophy, a state of mind.

Developing is not following a 10-minute tutorial on how to make a todo list with React and reproducing it. It's not reading and memorizing all the books on design patterns, clean code, data structures...

Being a developer is not knowing algorithms; it is Algorithming. It is not mastering every technology; it is knowing how to respond efficiently and quickly to a need or a problem, taking into account the context and the secondary factors, with adequate resources.

Finally, it is knowing how to create what doesn't exist yet, because a framework is above all built on a programming language, which is itself a set of programming paradigms. In some cases it is better to understand how the framework is built than to be a mere tenant.

Before talking about optimization, caching, load balancing or vertical/horizontal scaling, we need to talk about algorithms and language.

SELF OPTIMIZATION

As devs we have to touch a lot of technologies, but nothing prevents us from specializing in a language of our choice that will serve as our algorithmic base. Like Jeff Delaney, better known for the #fireship channel, I learned a lot of technologies by myself.

But I specialized in JS because I wanted to and because it is a very broad universe.

Just before starting this project, I had the very good idea of going back and relearning JS from the ground up, even though I had been using it for several years through its frameworks. And I was surprised!

Surprised to see that I KNEW NOTHING!

It was as if JS were the real technology and, all this time, I had been writing something very similar but not quite it: some kind of WildScript.

Rediscovering the base language that my project was built on allowed me to write code that was not only more and more logical but also more and more efficient.

Re-learning asynchronous logic, RxJS and data structures allowed me not just to multiply but to exponentially increase the execution speed of some processes. And even my computer was happier: we became buddies again 🥳

Design patterns, architectures and all the methodologies we spend our days reading about ended up coming naturally, depending on the problems I encountered. What will never come naturally is good algorithmic thinking and logic. That, we have to cultivate!

CODE OPTIMIZATION

There are a ton of ways to optimize code. But in all cases it is done progressively.

You can't have an urgent request with a tight deadline and think you're going to be a hero by writing perfectly clean code. If you persist in doing so, it is very likely that your office chair will be perfectly clean for the next few months, and your bank account will be too.

Warning. 🙄 I see you coming: this doesn't mean you shouldn't write readable and logical code. It just means that making it clean, organized and optimized is done gradually, after the logic of the requested feature has been implemented.

USE THE METHODS OF THE LANGUAGE

Each language comes with its own countless built-in functions. These have usually been written with performance in mind. They are your best friends to avoid downloading a lot of useless dependencies or creating another mess that will kill the next developer to look at it.

As far as JavaScript is concerned, the built-in functions (such as the methods on arrays) are your best friends. It's very unlikely that you'll need anything that hasn't already been done.
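To make that concrete, here is a minimal sketch that relies only on built-in array methods, `Map` and `Set`. The `Order` shape and the sample data are hypothetical, invented purely for illustration; they are not taken from the platform.

```typescript
// Hypothetical data shape, used only for illustration.
interface Order {
  id: number;
  customer: string;
  amount: number;
  paid: boolean;
}

const orders: Order[] = [
  { id: 1, customer: "alice", amount: 120, paid: true },
  { id: 2, customer: "bob", amount: 80, paid: false },
  { id: 3, customer: "alice", amount: 50, paid: true },
];

// filter + reduce: total of paid orders, no hand-written loop, no dependency.
function paidTotal(list: Order[]): number {
  return list.filter((o) => o.paid).reduce((sum, o) => sum + o.amount, 0);
}

// reduce into a Map: total amount per customer.
function totalByCustomer(list: Order[]): Map<string, number> {
  return list.reduce((totals, o) => {
    totals.set(o.customer, (totals.get(o.customer) ?? 0) + o.amount);
    return totals;
  }, new Map<string, number>());
}

// map + Set: distinct customer names, in first-seen order.
function customers(list: Order[]): string[] {
  return Array.from(new Set(list.map((o) => o.customer)));
}
```

None of this needs a utility library: the three helpers above are a few lines each and stay readable for the next developer.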

BE ASYNC!

Asynchronous coding is widely used in Node.js to ensure a non-blocking operational flow. Asynchronous I/O allows other processing to continue even before the first transmission is complete. Synchronous coding can potentially slow down your application. It uses blocking operations that could block your main thread, which will dramatically reduce the performance of your App.

The impact is huge! Execution cost is often described using Big O notation, such as O(log n), which expresses how running time grows with the size of the input.

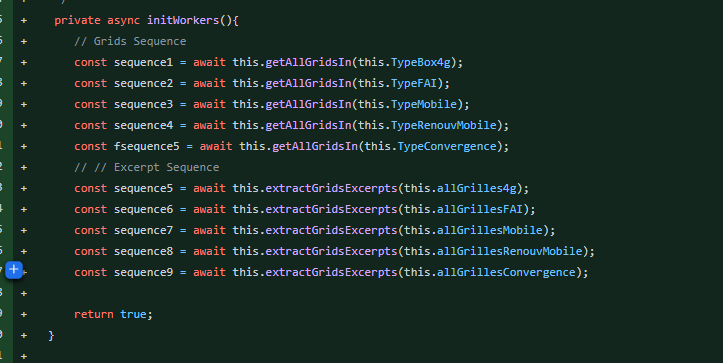

Example: below, we had a function that ran its operations in sequence. The problem is that each line waits for the previous one to finish before starting.

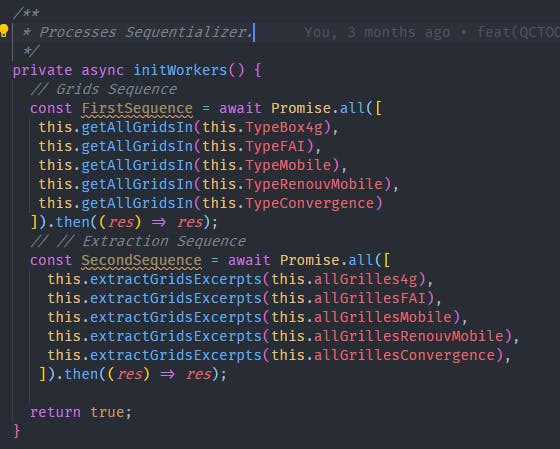

After a little optimization we have:

I know 😑 The result is not returned, for some reason. THAT'S NOT THE POINT.

Above, we execute the lines of the first sequence simultaneously, which divides the waiting time by 4. Note the elegance of "Promise.all()" 😍 JS is really... 🥰
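A minimal sketch of the idea, not the platform's actual code: it assumes four independent operations, and `fakeQuery` as well as the operation names are hypothetical stand-ins for real I/O calls. Each call records when it starts and ends so the difference between the two versions is visible.

```typescript
// Hypothetical stand-in for a slow I/O call (database query, HTTP request...).
function fakeQuery(name: string, ms: number, log: string[]): Promise<string> {
  log.push(`start ${name}`);
  return new Promise<string>((resolve) =>
    setTimeout(() => {
      log.push(`end ${name}`);
      resolve(name);
    }, ms),
  );
}

const names = ["sales", "stock", "staff", "targets"];

// Sequential: each await holds back the next call, so the total is roughly 4 x ms.
async function loadSequential(ms: number, log: string[]): Promise<string[]> {
  const results: string[] = [];
  for (const name of names) {
    results.push(await fakeQuery(name, ms, log));
  }
  return results;
}

// Concurrent: all four calls start right away, so the total is roughly 1 x ms.
// This is only valid because the four operations do not depend on each other.
async function loadConcurrent(ms: number, log: string[]): Promise<string[]> {
  return Promise.all(names.map((name) => fakeQuery(name, ms, log)));
}
```

Both functions return the results in the same order, since `Promise.all` preserves the order of its input. One thing to keep in mind: `Promise.all` rejects as soon as any of its promises rejects, so error handling has to be thought about for the group as a whole.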

Now imagine doing this wherever possible. The speed of the system is felt in every module. For example, I have a few rules that force me to think about and write more or less optimized code on the fly, such as:

"I always avoid iterating over an entire table."

"I always check whether something already exists before doing it."

"I don't need a comment if the code speaks for itself."

Etc... It may sound silly, but that's how I always get by. (Almost 😂).
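The first two rules can be sketched as follows. This is only an illustration under my own assumptions: the `Row` type, the `visited` counter and both function names are hypothetical.

```typescript
// Hypothetical record type for illustration.
interface Row {
  id: number;
  status: "ok" | "error";
}

// Rule 1: stop as soon as the answer is known.
// `some` returns at the first match instead of visiting every row.
// `visited` only exists here to make the early exit observable.
function hasError(rows: Row[], visited: { count: number }): boolean {
  return rows.some((row) => {
    visited.count++;
    return row.status === "error";
  });
}

// Rule 2: check whether something already exists before doing it again.
// A Map keyed by id gives a direct lookup instead of a scan.
function addIfMissing(index: Map<number, Row>, row: Row): boolean {
  if (index.has(row.id)) return false; // already there, nothing to do
  index.set(row.id, row);
  return true;
}
```

With four rows where the second one is in error, `hasError` looks at two rows and stops; the remaining rows are never touched.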

In comparison, we used to wait 8 to 10 minutes to see a graph on the production tracker. Today the same request takes ~200ms with the Redis cache disabled, and ~4ms with the cache enabled.

AVOID MEMORY LEAKS

Unsubscribe from your Subscriptions!!!!

I spent two weeks chasing a general slowness in my frontend, so I recommend this nugget which, if you use Angular, will solve all your worries: "Avoid Memory Leaks in Angular" (dev.to).

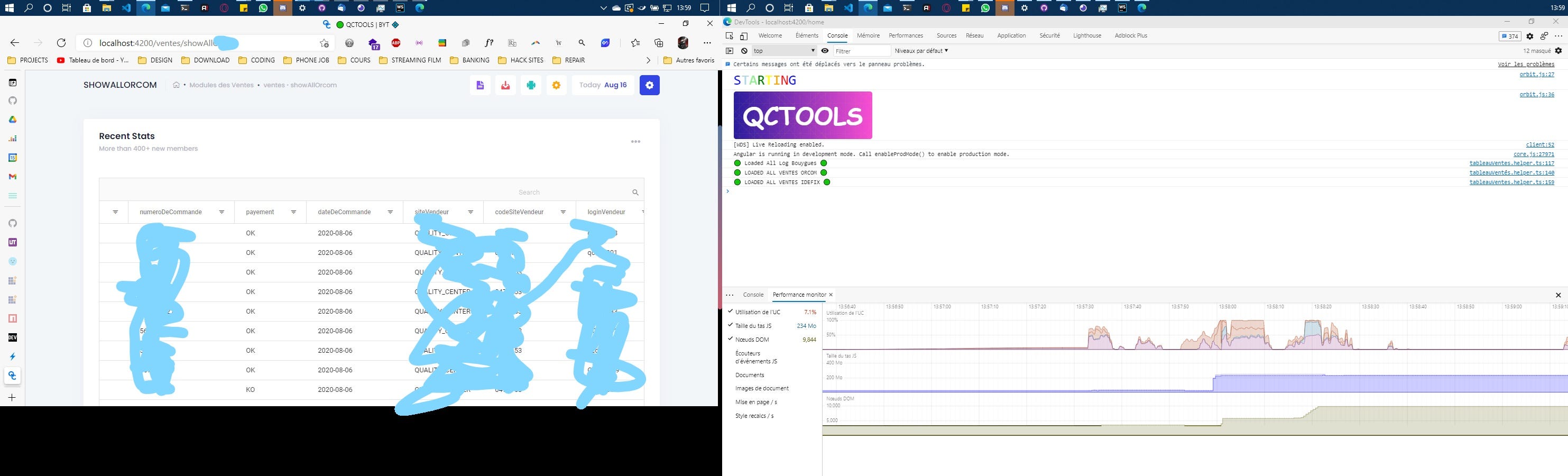

I won't even explain what I went through. Here is what it looks like in pictures:

PERF MONITOR Dealing With Memory Leaks.

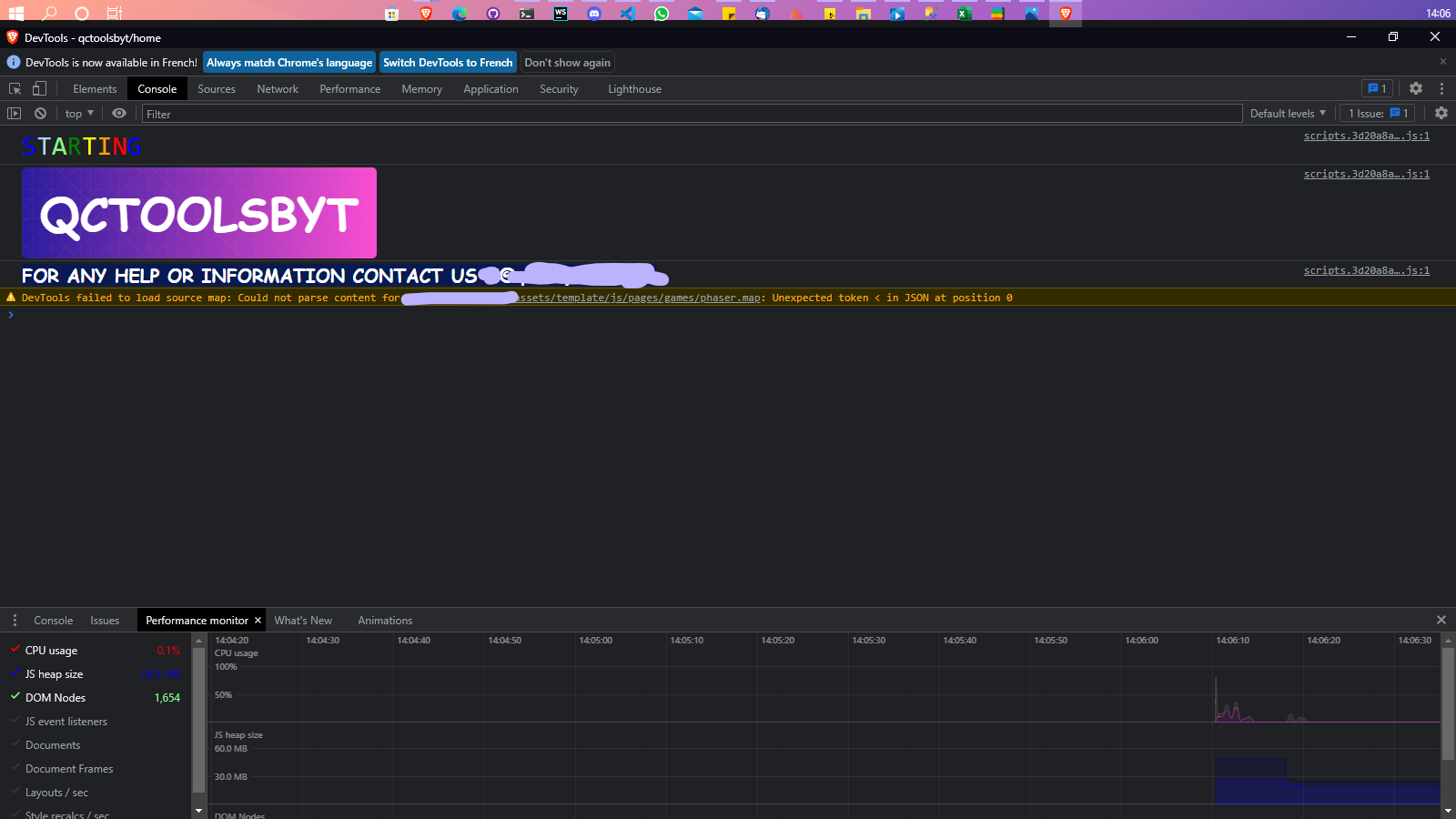

And this is what the Perf monitor should look like under normal conditions:

In our case, the "unsub" is done automatically by a helper. To avoid this kind of problem altogether, prefer the async pipe in your component's HTML template.
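The helper itself is not shown in this article, so what follows is only a dependency-free sketch of the principle, with hypothetical names throughout (`Ticker`, `SubscriptionBag`, `ChartWidget`). In a real Angular component, the equivalent would be calling `unsubscribe()` on the RxJS subscriptions inside `ngOnDestroy`.

```typescript
// Minimal stand-in for an observable source: `subscribe` returns a teardown function.
class Ticker {
  private listeners: Array<(value: number) => void> = [];

  subscribe(listener: (value: number) => void): () => void {
    this.listeners.push(listener);
    return () => {
      this.listeners = this.listeners.filter((l) => l !== listener);
    };
  }

  emit(value: number): void {
    this.listeners.forEach((listener) => listener(value));
  }

  listenerCount(): number {
    return this.listeners.length;
  }
}

// Hypothetical helper: collects teardowns and runs them all at once.
class SubscriptionBag {
  private teardowns: Array<() => void> = [];

  add(teardown: () => void): void {
    this.teardowns.push(teardown);
  }

  unsubscribeAll(): void {
    this.teardowns.forEach((teardown) => teardown());
    this.teardowns = [];
  }
}

// Component-like class: subscribes on init, releases everything on destroy.
class ChartWidget {
  received: number[] = [];
  private bag = new SubscriptionBag();

  constructor(private source: Ticker) {}

  onInit(): void {
    this.bag.add(
      this.source.subscribe((value) => {
        this.received.push(value);
      }),
    );
  }

  onDestroy(): void {
    // Without this call the listener outlives the widget and keeps it in memory.
    this.bag.unsubscribeAll();
  }
}
```

After `onDestroy()`, the source no longer holds a reference to the widget's callback, which is exactly what stops the leak from accumulating as components are created and destroyed.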

Once you have mastered your development workflow and optimized your code as much as possible, it is time to cache some processing or data.

ELIMINATE WAIT TIMES WITH A CACHE!

Server-side caching is one of the most common strategies for improving the performance of a Web application. Its main objective is to increase the speed of data retrieval, either by spending less time computing that data or performing I/O (such as retrieving that data from the network or from a database).

A cache is a high-speed storage layer used as temporary storage for frequently accessed data. You don't need to retrieve data from the main (usually much slower) source of data on every request.

Caching is most effective for data that doesn't change very often. If your application receives many requests for the same unchanged data, storing it in a cache will greatly improve the responsiveness of those requests. You can also store the results of computationally intensive tasks in the cache, as long as they can be reused for other requests. This prevents server resources from getting bogged down unnecessarily by repeating the work of computing this data.

Another common candidate for caching is API requests that go to an external system. Let's assume that the responses can be reliably reused for subsequent requests. In this case, it makes sense to store the API responses in the cache layer to avoid the extra network demand and any other costs associated with the API in question.
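The pattern described above is often called cache-aside. Here is a minimal in-memory sketch of it, assuming a fixed time-to-live per entry; `TtlCache` and its method names are hypothetical, and a `Map` stands in for a real cache server.

```typescript
// One cached value together with its expiry timestamp.
interface Entry<T> {
  value: T;
  expiresAt: number;
}

// In-memory stand-in for a cache layer; names are hypothetical.
class TtlCache<T> {
  private store = new Map<string, Entry<T>>();

  constructor(
    private ttlMs: number,
    private now: () => number = () => Date.now(), // injectable clock, handy for tests
  ) {}

  // Cache-aside: return the cached value if still fresh,
  // otherwise run the slow loader, store its result and return it.
  async getOrLoad(key: string, load: () => Promise<T>): Promise<T> {
    const hit = this.store.get(key);
    if (hit && hit.expiresAt > this.now()) return hit.value;

    const value = await load();
    this.store.set(key, { value, expiresAt: this.now() + this.ttlMs });
    return value;
  }

  // To be called when the underlying data changes.
  invalidate(key: string): void {
    this.store.delete(key);
  }
}
```

The two knobs that matter are the time-to-live and the invalidation: the less often the data changes, the longer the entry can live, and any write to the source should invalidate the matching key.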

At this point I was no longer alone: I had another great mind with me, and we chose to go with Redis.

Its integration with NestJS was super easy and we had all the resources to set it up. From day one we were amazed at the performance gain we had just had. We even thought we were rivals to Google because we were riding the speed vibe.

We were now talking about less than 7ms! We spent days celebrating that. 😂😂😂😂

On the front end, browser-side caching was already doing the job. Then reducing and cleaning up our bundle size and fixing the memory leaks left us with no more UI latency.

SCALING

As I said earlier, we deployed first before continuing our builds and deliveries. This very DevOps-oriented workflow allowed us to think early on about solutions for the future scaling of our application.

Although we had all the resources we wanted, I always refused to use more than I needed. This means that throughout its existence, the application (front and back) has been deployed on one of our weakest servers.

A machine with 4 GB of RAM, an HDD and an i7 processor. Nothing out of the ordinary.

This was the best way to force ourselves to have a good architecture and well-optimized code.

Especially since this server also hosts other applications: an internal project management tool that I set up and that manages the whole IT team (I'll talk about it in another article), the attendance sheets, projects/tasks, as well as the helpdesk and leave requests; and a MySQL server used by some applications but also for our tests. YES! 😂

However, the more modules there were, the better the platform became, because we always applied the Boy Scout rule.

On the CI/CD side, for both the front and the back, quite a few scripts run at BUILD time. Among other things, they version the application, copy or move the contents of "dist" to the deployment folder, update Git and GitHub, and handle other post-build tasks.

HORIZONTAL SCALING

Since the beginning of this article, you haven't seen me set up a load balancer or Docker anywhere. 🧐 Does my platform suffer from it? NO!

It's all about context. You can't do everything at once or master everything at once. I have a ton of tech that I don't master and yet that doesn't stop me from doing things that work well.

We used NGINX as our web server and reverse proxy because it was the best suited and most efficient for applications running on Node.js. Adding a load balancer would not take us much more time once the platform was dockerized.

However, from the beginning I wanted to isolate and monitor my applications running on Node.js without having to configure Docker for each of them.

Once again I discovered PM2!

PM2 is a "process manager" that allows you to do a lot of things with a node application. I leave you the pleasure to go and see by yourself.

But basically, it is useful to use a process manager to:

Restart the application automatically if it crashes.

Get information about execution performance and resource consumption.

Dynamically change settings to improve performance.

Control clustering.

A process manager is a bit like an application server: it is a "container" for applications that facilitates deployment, provides high availability and allows you to manage the application at runtime.

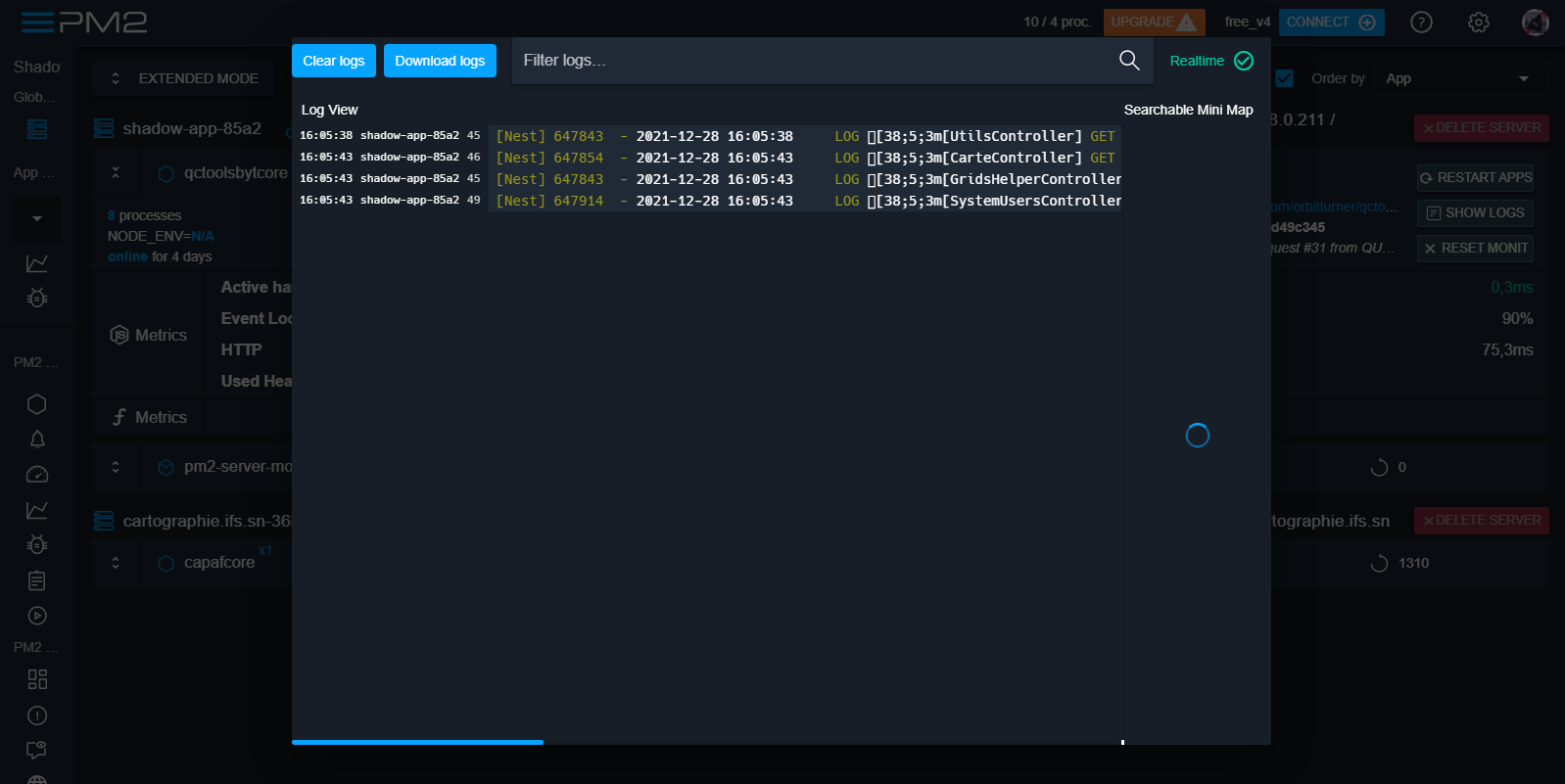

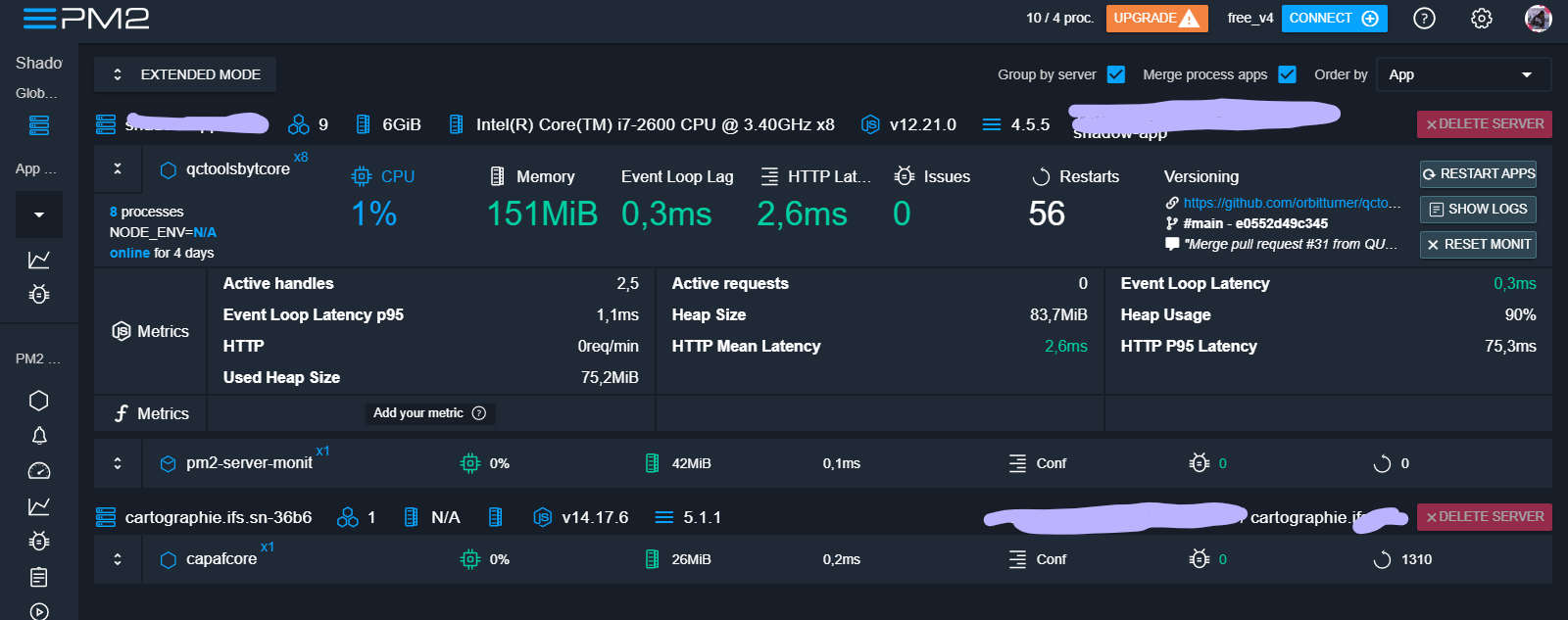

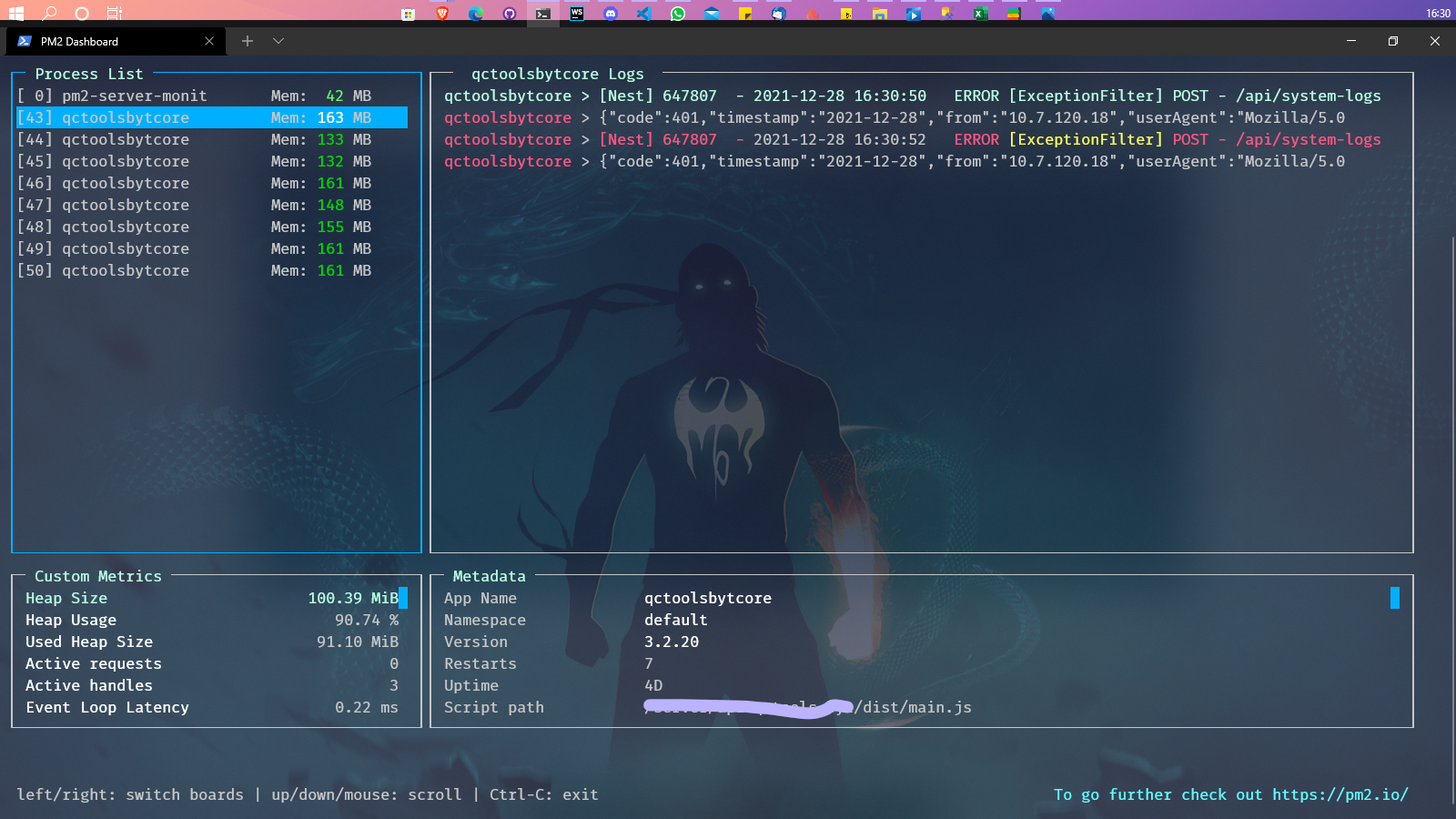

Once all the configuration was done in PM2, we were able to get precise, real-time information about the platform and its usage, in the console or online, and deduce actions from it.

Example :

But the most incredible thing is the clustering.

WHAT IS HORIZONTAL SCALING ANYWAY?

It's the ability to increase server capacity by connecting multiple hardware or software entities so that they function as a single logical unit. Horizontal scaling can be achieved using clustering, distributed file systems and load balancing.

An instance of Node.js runs in a single thread, which means that on a multi-core system (which most computers are these days), not all cores will be used by the application. To take advantage of the other available cores, you can run a cluster of Node.js processes and distribute the load between them.

Having multiple processes to handle requests improves the throughput (requests/second) of your server because multiple clients can be served simultaneously. The request load is distributed over several instances of the application.
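To picture what "distributing the load" means, here is a toy round-robin dispatcher. It is a simplification written for this explanation, not PM2's implementation: the `RoundRobin` class and the worker names are hypothetical. Round-robin is, however, the default scheduling policy of Node.js's cluster module on platforms other than Windows.

```typescript
// Toy dispatcher: hands each incoming request to the next instance in turn.
class RoundRobin<T> {
  private next = 0;

  constructor(private instances: T[]) {
    if (instances.length === 0) {
      throw new Error("at least one instance is required");
    }
  }

  pick(): T {
    const instance = this.instances[this.next];
    // Wrap around to the first instance after the last one.
    this.next = (this.next + 1) % this.instances.length;
    return instance;
  }
}

// Simulate `count` requests and report which instance served each one.
function dispatch(workers: string[], count: number): string[] {
  const balancer = new RoundRobin(workers);
  const served: string[] = [];
  for (let i = 0; i < count; i++) {
    served.push(balancer.pick());
  }
  return served;
}
```

With three workers and seven requests, the first worker serves three of them and the other two serve two each, so no single process takes the whole load.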

This can be more or less easy to implement in an app that has just started, but in our case we only decided to do it at the last moment. Don't ask me why 😫 That's life! That's how it is! Facebook was crap at the beginning too. 👨🏾‍🦯

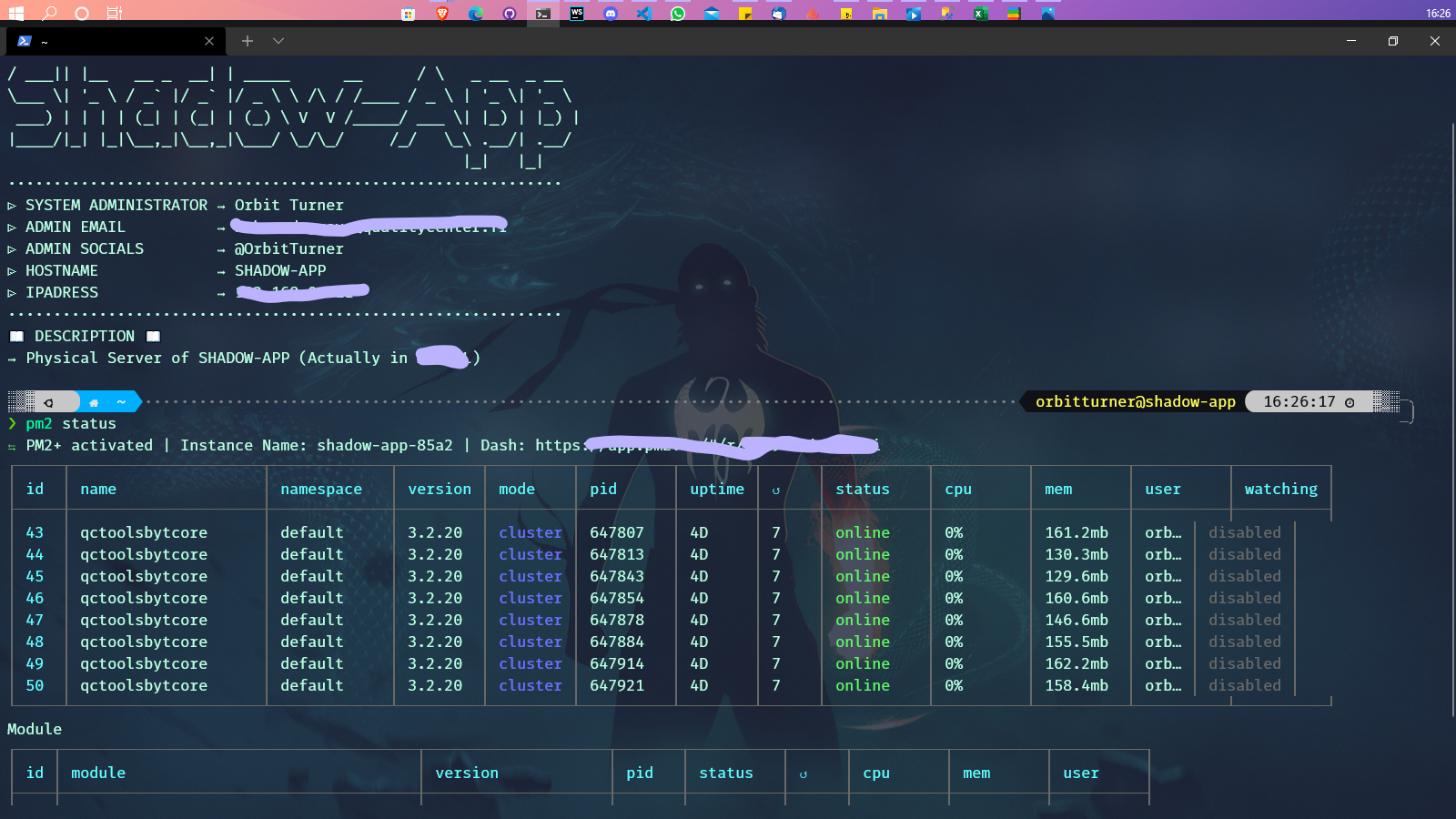

We were too lazy to rework our modules and/or our whole app. That's when PM2 pulled another card out of its sleeve: CLUSTERING, which it can apply to an already built app. And how?

Just like that! 🥳 It duplicates your process and manages the balancing itself like a big guy! 🔥✨

That's not all: with each new deployment, PM2 reloads and updates the instances one by one while making sure there is no downtime!
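For reference, a cluster-mode setup is usually described in a PM2 ecosystem file. The sketch below shows what such a file could contain; the option names (`instances`, `exec_mode`, `autorestart`, `max_memory_restart`) are PM2's own, but the values are assumptions made for this example (app name, entry file, memory limit), not the platform's real configuration. The local `Pm2AppConfig` type is only there for readability.

```typescript
// Local type covering only the PM2 app options used below.
interface Pm2AppConfig {
  name: string;
  script: string;
  instances: number | "max";
  exec_mode: "fork" | "cluster";
  autorestart: boolean;
  max_memory_restart: string;
}

// Hypothetical values: app name, entry file and memory limit are assumptions.
const apps: Pm2AppConfig[] = [
  {
    name: "bi-api",
    script: "dist/main.js",
    instances: "max", // one process per available CPU core
    exec_mode: "cluster", // PM2 spreads incoming connections across the processes
    autorestart: true, // restart a process if it crashes
    max_memory_restart: "300M", // restart a process that grows past this limit
  },
];

// In a real ecosystem.config.js this would be exported as: module.exports = { apps };
// Start the cluster:         pm2 start ecosystem.config.js
// Reload without downtime:   pm2 reload ecosystem.config.js
```

`pm2 reload` restarts the processes one after another rather than all at once, which is what keeps the application reachable during a deployment.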

LIST OF ALL RUNNING INSTANCES on Terminal.

If you want to know more about how to configure clustering with PM2, I recommend this article: "Improving Node.js Application Performance With Clustering" (AppSignal Blog, blog.appsignal.com).

Async vs MultiThread

It is a common misconception that asynchronous programming and multithreading are the same thing. They are not. Asynchronous programming is about tasks that do not block while waiting for one another, while multithreading is about multiple threads or processes running in parallel.

Multithreading is one way to achieve asynchronous programming, but we can also have asynchronous tasks on a single thread.

Multithreading acts at the processor level, while asynchrony acts at the level of how the program operates.
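The single-thread case can be observed directly in JavaScript. In this small sketch (function names are my own), two tasks take turns on the same thread: each one logs a step, then gives control back with `await`.

```typescript
// Each task logs a step, then yields to the event loop with `await`.
async function task(name: string, steps: number, log: string[]): Promise<void> {
  for (let step = 1; step <= steps; step++) {
    log.push(`${name}${step}`);
    await Promise.resolve(); // yield: lets the other task run on the same thread
  }
}

// Runs two tasks concurrently on one thread and returns the order of their steps.
async function runBoth(): Promise<string[]> {
  const log: string[] = [];
  await Promise.all([task("A", 2, log), task("B", 2, log)]);
  return log;
}
```

The resulting log is A1, B1, A2, B2: the tasks interleave, yet at no point do two lines of code run at the same time. That is concurrency without parallelism, which is the difference the paragraphs above describe.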

FINALLY, FOLLOW-UP & MONITORING

Before trying to improve the performance of a system, it is necessary to measure the current performance level. This way, you will know the inefficiencies and the right strategy to adopt to get the desired results. Evaluating the current level of performance of an application may require performing different types of tests, such as the following:

Load testing: refers to the practice of simulating the expected use of a system and measuring its response as the workload increases.

Stress testing: designed to measure the performance of a system beyond the limits of normal working conditions. Its purpose is to determine how much the system can handle before it fails and how it attempts to recover from a failure.

Spike testing: tests the behavior of an application when it receives a drastic increase or decrease in load.

Scalability testing: used to find the point at which the application stops scaling and identify the reasons behind it.

Volume testing: determines whether a system can handle large amounts of data.

Endurance testing: evaluates the behavior of a software application under sustained load for a long period of time to detect problems such as memory leaks.

Running some or all of the above tests will provide you with several important metrics, such as:

response time

average latency

error rate

requests per second

processor and memory usage

concurrent users

and more.
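Several of these metrics are simple arithmetic over the raw measurements. As a rough sketch, assuming each request is recorded with its duration and a success flag (the `Sample` and `Summary` shapes and the `summarize` function are hypothetical):

```typescript
// One measured request, as a load-testing run might record it (hypothetical shape).
interface Sample {
  durationMs: number;
  ok: boolean;
}

interface Summary {
  requestsPerSecond: number;
  averageLatencyMs: number;
  maxResponseTimeMs: number;
  errorRate: number; // fraction between 0 and 1
}

// Aggregates the samples collected during a test window of `windowSeconds`.
function summarize(samples: Sample[], windowSeconds: number): Summary {
  if (samples.length === 0 || windowSeconds <= 0) {
    throw new Error("need at least one sample and a positive window");
  }
  const durations = samples.map((s) => s.durationMs);
  const total = durations.reduce((sum, d) => sum + d, 0);
  const errors = samples.filter((s) => !s.ok).length;

  return {
    requestsPerSecond: samples.length / windowSeconds,
    averageLatencyMs: total / samples.length,
    maxResponseTimeMs: Math.max(...durations),
    errorRate: errors / samples.length,
  };
}
```

Running the same summary before and after an optimization, on the same scenario, is what makes the comparison meaningful.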

After implementing a specific optimization, be sure to run the tests again to verify that your changes have had the desired effect on system performance.

It is also important to use an application performance monitoring (APM) tool to keep an eye on the long-term performance of a system. Various monitoring solutions can take care of this for you.

Monitoring users and their actions, in the case of an enterprise product, also helps to prevent fraud and to establish, or rule out, the responsibility of the people involved.

Today, day after day, we continue to face challenges and respond to requests that are just as crazy! But we have no fears about the evolution and robustness of our application. We still have a lot to perfect and implement, but we do it with pride and wisdom.

It is not because of my talent or genius that this platform is where it is today, NO. It's because I was lucky enough to be able to set boundaries. To have known that it was necessary to lay small stones end to end to have this structure.

I was also lucky to have a CIO with an incredible mindset who allowed me to test what I had in mind. Who knew how to tolerate my mistakes and who allowed me to evolve.

But I also owe a lot to what we developers often forget: SOFT SKILLS. Being a developer also means being able to read situations and having the tact to seize opportunities where they exist and to make an impact on people's minds and hearts.

**Because at the end of the day, we make software for humans and not just for users.**

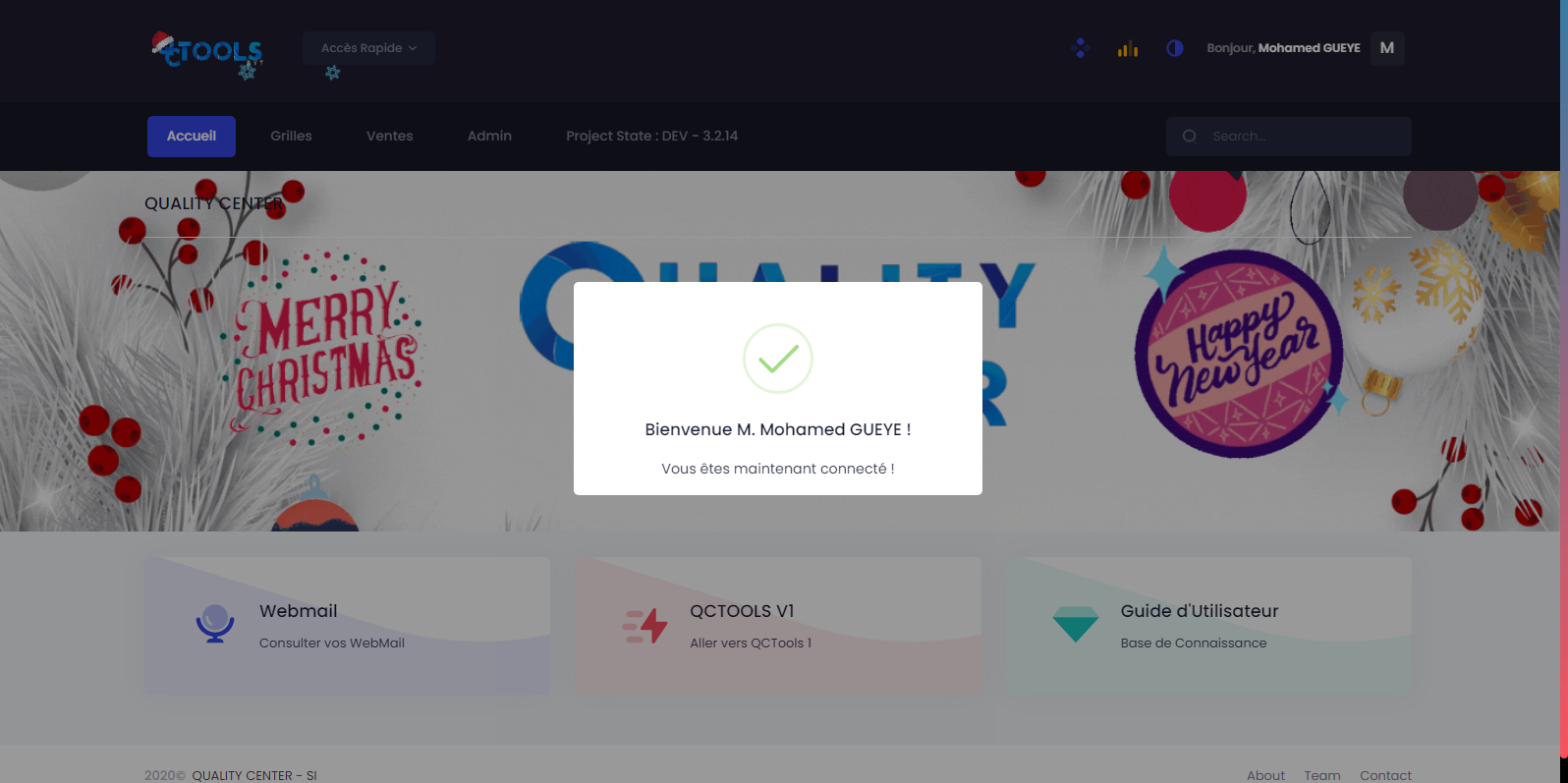

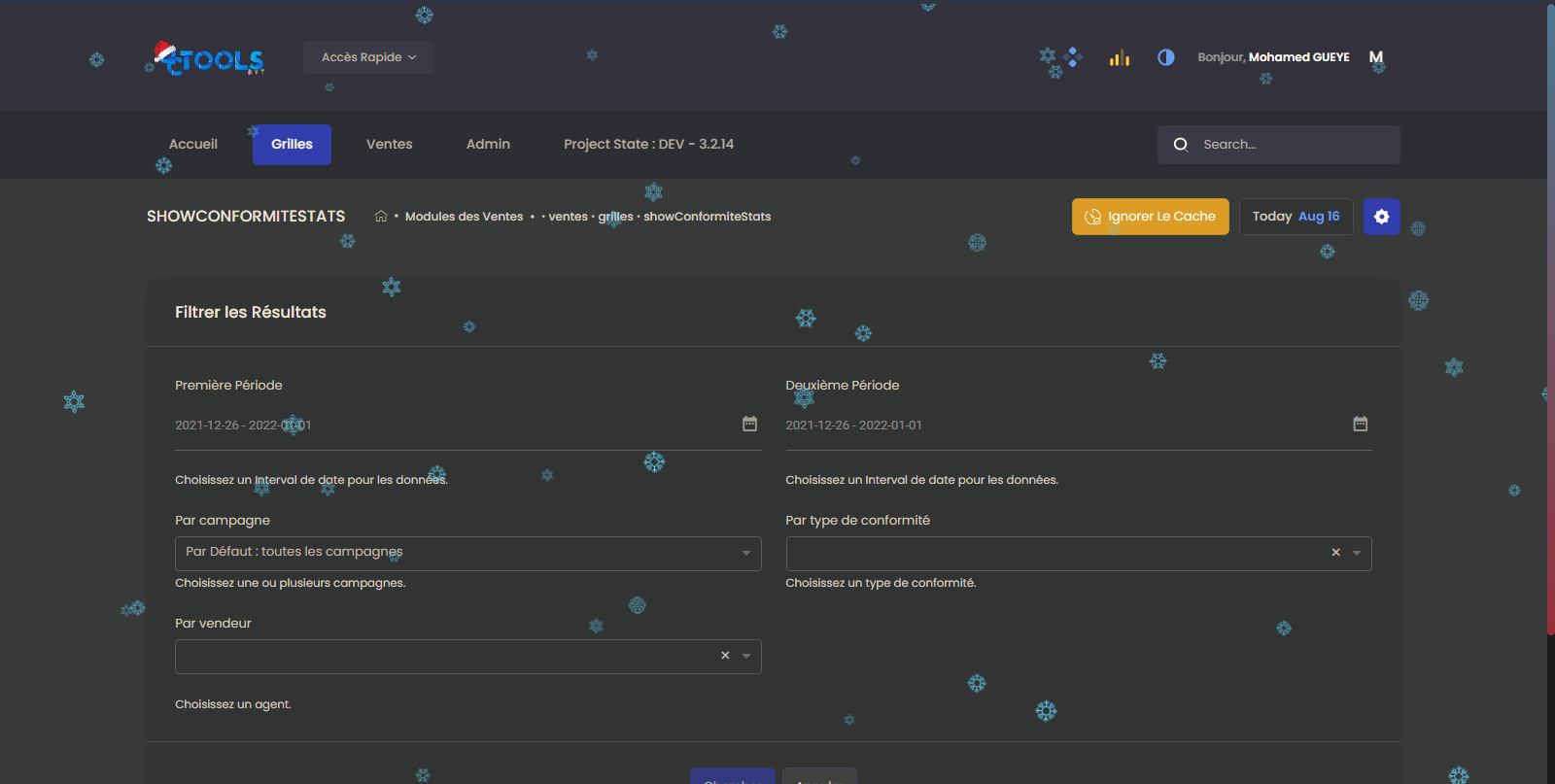

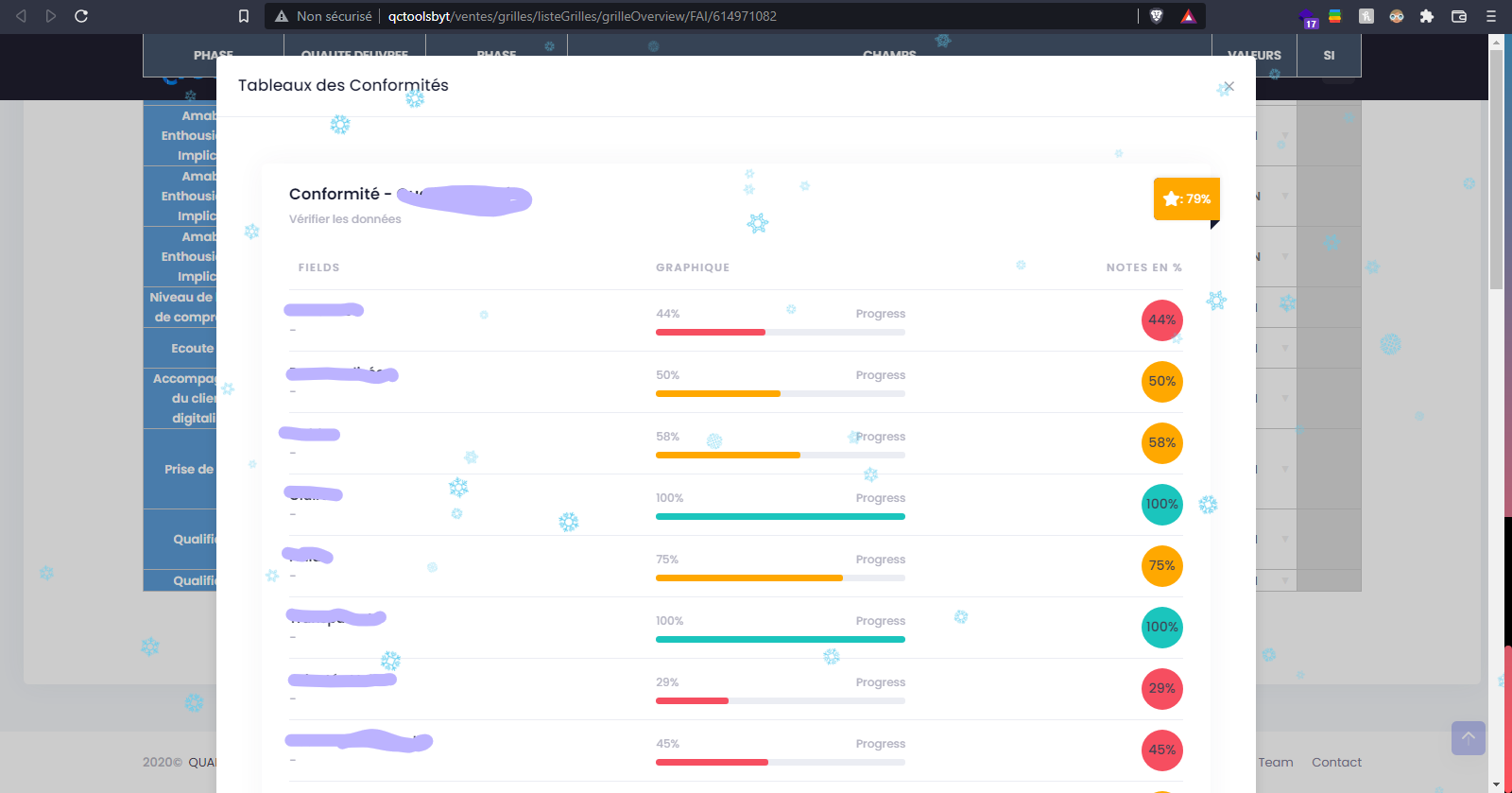

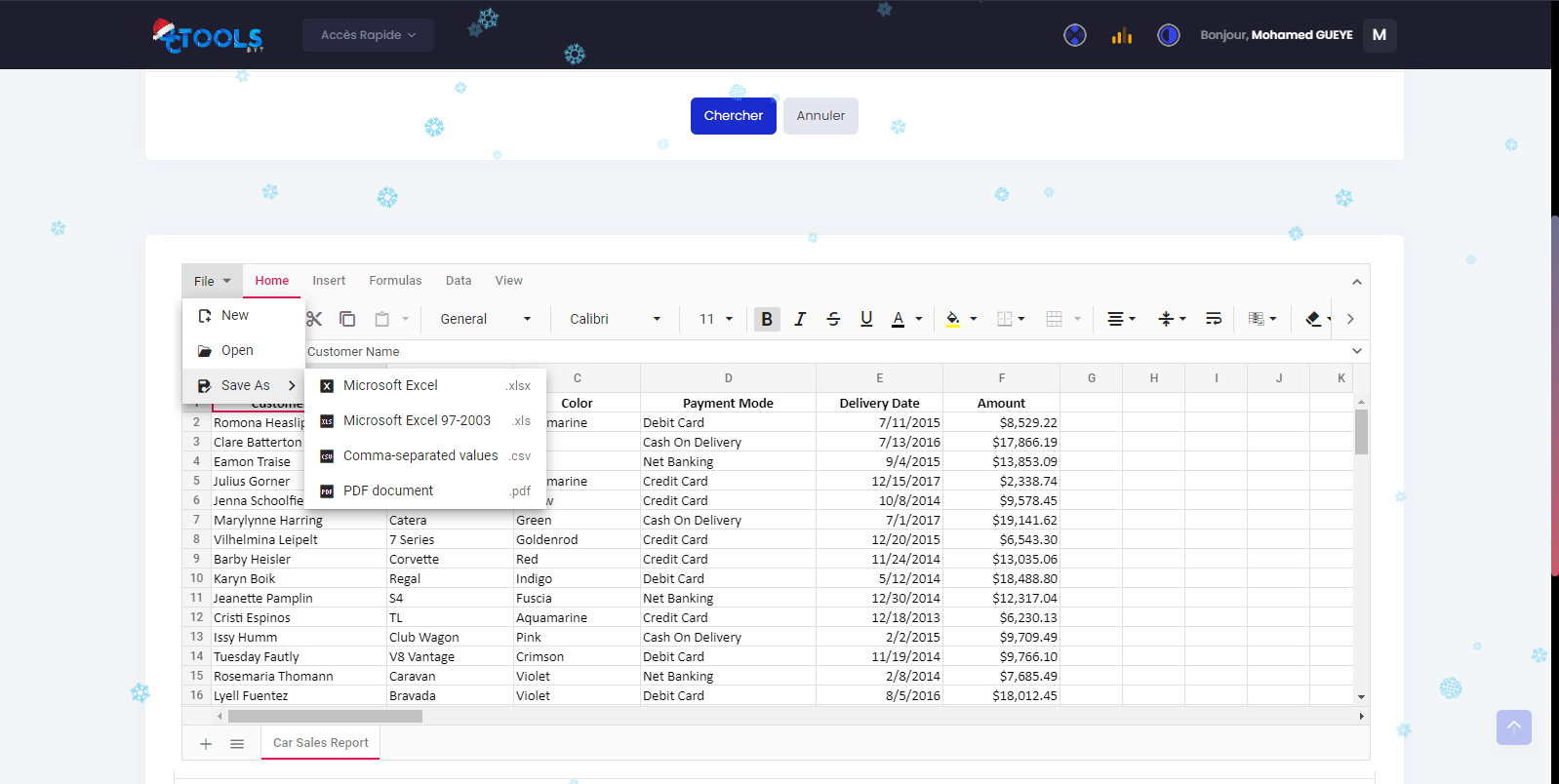

SOME SCREENSHOTS OF THE PLATFORM

Due to an NDA, I will not show you a full version of the application or its other features. Nevertheless, I'm open to any further questions.

Users receive nice animated mails when an important action is done on their account (reset, block, register):

Many modules require data filling or complex table creation so we have implemented a Grids component that allows you to have either an element with all the Excel features or a full-fledged alternative to Excel:

...

FIND ME ON MY WEBSITE: 🚀 ORBIT WORLD ⍟ Keep Going Further 🚀 (orbitturner.com)